ChatGPT powered 3-band equalizer

A couple of weeks ago, back in October, the nice people of Automotive Grade Linux asked me if it would be possible to add some “bass” and “treble” controls on the audio output of the AGL In-Vehicle Infotainment demo image, using PipeWire. I knew, of course, that it’s perfectly doable using filters and I knew that PipeWire ships with the filter-chain module, which can do wonders in that regard. But what I didn’t know was how to configure it exactly for this particular job. To figure this out, I embarked on my first ChatGPT coding adventure…

- Chapter 1: Configuring PipeWire

- Chapter 2: Asking ChatGPT for help

- Chapter 3: Manual refinement

- Chapter 4: The final result

Chapter 1: Configuring PipeWire

To begin with, I had to start from PipeWire’s documentation. Reading through the documentation, I quickly found that the biquads built-in filter of module-filter-chain would be suitable for this job. The idea was to create curves that amplify or suppress specific ranges of frequencies. Grouping low and high frequencies in two separate curves would then create a “bass” and a “treble” control, respectively. This is also how multiband equalizers are implemented, but with more groups.
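To make the idea of a “peaking” curve more concrete, here is a minimal Python sketch that computes biquad coefficients with the widely used Audio EQ Cookbook formulas and then measures the resulting gain at a given frequency. It is only an illustration, assuming a peaking filter behaves like the cookbook one; it is not PipeWire’s code, and the function names and the 48 kHz sample rate are made up for the example.

```python
import cmath
import math


def peaking_biquad(freq_hz, q, gain_db, sample_rate=48000):
    """Return normalized (b0, b1, b2, a1, a2) for a peaking EQ biquad."""
    amp = 10 ** (gain_db / 40.0)               # square root of the linear gain
    w0 = 2 * math.pi * freq_hz / sample_rate   # centre frequency in radians/sample
    alpha = math.sin(w0) / (2 * q)

    b0 = 1 + alpha * amp
    b1 = -2 * math.cos(w0)
    b2 = 1 - alpha * amp
    a0 = 1 + alpha / amp
    a1 = -2 * math.cos(w0)
    a2 = 1 - alpha / amp
    # Normalize so that a0 becomes 1
    return (b0 / a0, b1 / a0, b2 / a0, a1 / a0, a2 / a0)


def gain_db_at(coeffs, freq_hz, sample_rate=48000):
    """Magnitude response of the biquad, in dB, at a single frequency."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-1j * 2 * math.pi * freq_hz / sample_rate)  # z^-1
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (1 + a1 * z1 + a2 * z1 * z1)
    return 20 * math.log10(abs(h))


bass = peaking_biquad(100.0, 1.0, 6.0)
print(round(gain_db_at(bass, 100.0), 2))   # gain at the centre frequency
print(round(gain_db_at(bass, 5000.0), 2))  # gain far away from the centre
```

The boost is concentrated around the centre frequency and fades out away from it, which is why one such curve per frequency range is enough to act as a “bass” or “treble” knob.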

Having no prior experience with module-filter-chain or equalizer implementations in general, I had to test this first. Using the biquads designer, which is linked from the documentation, I started by building a configuration file with 3 biquad “peaking” filters, i.e. curves that have a peak at a specific frequency. My thinking was, let’s create a “bass”, “mid” and “treble” equalizer first! This configuration wasn’t entirely correct on the first attempt, but that is beside the point.

This is what it looked like:

    # File: "sink-eq3.conf"
    # 3 band sink equalizer
    #
    # Copy this file into a filter-chain.conf.d/ directory such as
    # ~/.config/pipewire/filter-chain.conf.d/
    #
    # and run with: pipewire -c filter-chain.conf
    #
    context.modules = [
        { name = libpipewire-module-filter-chain
            args = {
                node.description = "Equalizer Sink"
                media.name = "Equalizer Sink"
                filter.graph = {
                    nodes = [
                        {
                            type = builtin
                            name = eq_band_1
                            label = bq_peaking
                            control = { "Freq" = 100.0, "Q" = 1.0, "Gain" = 0.0 }
                        }
                        {
                            type = builtin
                            name = eq_band_2
                            label = bq_peaking
                            control = { "Freq" = 440.0, "Q" = 1.0, "Gain" = 0.0 }
                        }
                        {
                            type = builtin
                            name = eq_band_3
                            label = bq_peaking
                            control = { "Freq" = 1000.0, "Q" = 1.0, "Gain" = 0.0 }
                        }
                    ]
                    links = [
                        { output = "eq_band_1:Out", input = "eq_band_2:In" }
                        { output = "eq_band_2:Out", input = "eq_band_3:In" }
                    ]
                }
                audio.channels = 2
                audio.position = [ FL, FR ]
                capture.props = {
                    node.name = "effect_input.eq3"
                    media.class = Audio/Sink
                }
                playback.props = {
                    node.name = "effect_output.eq3"
                    node.passive = true
                }
            }
        }
    ]

After building and loading this configuration into PipeWire, I ended up with two more nodes in my graph: a virtual sink and a playback stream. This is how all filters and loopbacks are normally presented, as two interconnected streams where the first consumes input and the second produces output. I linked the playback stream to the actual audio sink and made the virtual sink the default sink, so that I could play some music through it. I started the music and it was correctly linked, but it sounded normal. Now there was this big question in my head: how do I test the equalizer?

Obviously, I needed to change some controls. In particular, the “Gain” of each peaking filter. I looked at the output of pw-dump for each of these two nodes that belonged to the filter-chain and I noticed that the input node (the virtual sink) had a list of controls available under the Props param that looked like this:

    $ pw-dump effect_input.eq3
    [
      {
        "id": 218,
        "type": "PipeWire:Interface:Node",
        "version": 3,
        "permissions": [ "r", "w", "x", "m" ],
        "info": {
          "max-input-ports": 65,
          "max-output-ports": 0,
          "change-mask": [ "input-ports", "output-ports", "state", "props", "params" ],
          "n-input-ports": 2,
          "n-output-ports": 2,
          "state": "suspended",
          "error": null,
          "props": {
            ...
          },
          "params": {
            ...
            "Props": [
              {
                "volume": 1.000000,
                "mute": false,
                "channelVolumes": [ 1.000000, 1.000000 ],
                "channelMap": [ "FL", "FR" ],
                "softMute": false,
                "softVolumes": [ 1.000000, 1.000000 ],
                "monitorMute": false,
                "monitorVolumes": [ 1.000000, 1.000000 ],
                "params": [
                  ...
                ]
              },
              {
                "params": [
                  "eq_band_1:Freq",
                  100.000000,
                  "eq_band_1:Q",
                  1.000000,
                  "eq_band_1:Gain",
                  0.000000,
                  "eq_band_1:b0",
                  1.000000,
                  "eq_band_1:b1",
                  0.000000,
                  "eq_band_1:b2",
                  0.000000,
                  "eq_band_1:a0",
                  1.000000,
                  "eq_band_1:a1",
                  0.000000,
                  "eq_band_1:a2",
                  0.000000,
                  "eq_band_2:Freq",
                  440.000000,
                  "eq_band_2:Q",
                  1.000000,
                  "eq_band_2:Gain",
                  0.000000,
                  "eq_band_2:b0",
                  1.000000,
                  "eq_band_2:b1",
                  0.000000,
                  "eq_band_2:b2",
                  0.000000,
                  "eq_band_2:a0",
                  1.000000,
                  "eq_band_2:a1",
                  0.000000,
                  "eq_band_2:a2",
                  0.000000,
                  "eq_band_3:Freq",
                  1000.000000,
                  "eq_band_3:Q",
                  1.000000,
                  "eq_band_3:Gain",
                  0.000000,
                  "eq_band_3:b0",
                  1.000000,
                  "eq_band_3:b1",
                  0.000000,
                  "eq_band_3:b2",
                  0.000000,
                  "eq_band_3:a0",
                  1.000000,
                  "eq_band_3:a1",
                  0.000000,
                  "eq_band_3:a2",
                  0.000000
                ]
              }
            ],
            ...
          }
        }
      }
    ]

Note that I have abridged the output of pw-dump to show only the relevant parts, for readability. The original output is a bit too long and maybe hard to read at first, but actually it’s not that complicated. Remember that the Props parameter is the place where such dynamic controls are always present.

Now with that in mind, I needed to figure out how to change the value of the eq_band_1:Gain control. I knew that pw-cli can be used to change these kinds of parameters, but I had to refresh my memory on the syntax. Eventually, I figured out that I could do this:

$ pw-cli s 218 Props '{ "params": [ "eq_band_1:Gain", 1.0 ] }'

Fascinating! I could now change the gain of the first peaking filter and hear the difference. But that seemed kind of tedious and complicated to do repetitively for all the bands in order to test different configurations. I wanted to have an easy to use slider to change all these controls easily. I needed a GUI!
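Short of a full GUI, the typing can at least be scripted. The following is a rough sketch, not part of the original experiment: it builds the same pw-cli call as an argument list, so no shell quoting is involved. The helper names and the dry_run flag are hypothetical, 218 is simply the node id from the dump above, and it assumes pw-cli accepts the compact JSON produced by json.dumps just like the hand-written version.

```python
import json
import shlex
import subprocess


def build_set_gain_command(node_id, band_number, gain_db):
    """Build the pw-cli argument list that sets the Gain of one band."""
    payload = {"params": [f"eq_band_{band_number}:Gain", float(gain_db)]}
    return ["pw-cli", "s", str(node_id), "Props", json.dumps(payload)]


def set_gains(node_id, gains_db, dry_run=True):
    """Set one gain per band; with dry_run=True the commands are only printed."""
    for band_number, gain_db in enumerate(gains_db, start=1):
        command = build_set_gain_command(node_id, band_number, gain_db)
        if dry_run:
            print(shlex.join(command))  # shell-quoted, ready to paste
        else:
            subprocess.run(command, check=True)


# Dry run: print the three commands instead of executing them
set_gains(218, [3.0, 0.0, -2.0])
```

Calling set_gains with dry_run=False would run the commands for real, which of course requires a running PipeWire and a valid node id.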

But writing a GUI is an even more tedious process… I know from experience. So I thought, I bet ChatGPT can do this for me! So many people have been reporting using it for all sorts of things, why not try it myself? And so I did…

Chapter 2: Asking ChatGPT for help

I started a new chat and prompted ChatGPT with the following:

I want to create a graphical user interface application for the Linux desktop, which will have 1 window presenting a 3 band equalizer. The equalizer backend is implemented in a separate service that communicates with this GUI using JSON

My thinking behind this prompt was that I wanted it to write the code for the GUI first and leave the PipeWire communication part for later. I thought that if I can get it to communicate with some JSON, I could then plug pw-dump and pw-cli in there and it would work.

The AI chatbot responded with detailed instructions on how to write a GUI with Python and GTK, which packages to install, and what code to try. The code looked like this:

    import gi
    gi.require_version('Gtk', '3.0')
    from gi.repository import Gtk
    import json

    class EqualizerApp(Gtk.Window):
        def __init__(self):
            Gtk.Window.__init__(self, title="3 Band Equalizer")
            self.set_default_size(400, 200)

            self.grid = Gtk.Grid()
            self.add(self.grid)

            self.slider1 = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, 0, 100, 1)
            self.slider1.set_value(50)
            self.slider1.connect("value-changed", self.on_slider1_changed)
            self.grid.attach(self.slider1, 0, 0, 1, 1)

            self.slider2 = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, 0, 100, 1)
            self.slider2.set_value(50)
            self.slider2.connect("value-changed", self.on_slider2_changed)
            self.grid.attach(self.slider2, 0, 1, 1, 1)

            self.slider3 = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, 0, 100, 1)
            self.slider3.set_value(50)
            self.slider3.connect("value-changed", self.on_slider3_changed)
            self.grid.attach(self.slider3, 0, 2, 1, 1)

        def on_slider1_changed(self, widget):
            value = self.slider1.get_value()
            # Send this value to the backend service using JSON

        def on_slider2_changed(self, widget):
            value = self.slider2.get_value()
            # Send this value to the backend service using JSON

        def on_slider3_changed(self, widget):
            value = self.slider3.get_value()
            # Send this value to the backend service using JSON

    win = EqualizerApp()
    win.connect("destroy", Gtk.main_quit)
    win.show_all()
    Gtk.main()

Interesting! I tried to run this and it worked, but there was something wrong with the window size and resizing it didn’t resize the sliders. So, I asked for refinement:

I need the sliders to expand and fill the whole surface of the window

ChatGPT responded with a new code snippet that looked like this:

    import gi
    gi.require_version('Gtk', '3.0')
    from gi.repository import Gtk

    class EqualizerApp(Gtk.Window):
        def __init__(self):
            Gtk.Window.__init__(self, title="3 Band Equalizer")
            self.set_default_size(400, 200)

            self.box = Gtk.Box(orientation=Gtk.Orientation.VERTICAL, spacing=6)
            self.add(self.box)

            self.slider1 = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, 0, 100, 1)
            self.slider1.set_value(50)
            self.slider1.connect("value-changed", self.on_slider1_changed)
            self.box.pack_start(self.slider1, True, True, 0)

            self.slider2 = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, 0, 100, 1)
            self.slider2.set_value(50)
            self.slider2.connect("value-changed", self.on_slider2_changed)
            self.box.pack_start(self.slider2, True, True, 0)

            self.slider3 = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, 0, 100, 1)
            self.slider3.set_value(50)
            self.slider3.connect("value-changed", self.on_slider3_changed)
            self.box.pack_start(self.slider3, True, True, 0)

        def on_slider1_changed(self, widget):
            value = self.slider1.get_value()
            # Send this value to the backend service using JSON

        def on_slider2_changed(self, widget):
            value = self.slider2.get_value()
            # Send this value to the backend service using JSON

        def on_slider3_changed(self, widget):
            value = self.slider3.get_value()
            # Send this value to the backend service using JSON

    win = EqualizerApp()
    win.connect("destroy", Gtk.main_quit)
    win.show_all()
    Gtk.main()

That was better! It added a Gtk.Box container, which did the trick. Now it was time to worry about the JSON communication. I wasn’t sure how to do that, but I knew that I needed the app to run pw-dump and then parse the output to get the initial values of the controls. So I prompted the following:

That’s better. Now, I want this program to take as a command-line argument an integer number, which will be referred to as the “node id”. When the program starts, it should run the command pw-dump <node id> and expect to receive in its stdout stream a JSON object that looks like this:

And below that prompt, I pasted an abridged version of the pw-dump output, which included the Props and PropInfo objects as-is, but I removed things like the properties and other types of params, which were irrelevant. I also added some further instructions below the pasted output:

It should then set the initial values of the sliders based on the Gain values of the eq bands 1, 2, and 3, respectively. The Gain value range is between -20.0 and 20.0 and is expressed in dB.

Et voilà!

    import gi
    import subprocess
    gi.require_version('Gtk', '3.0')
    from gi.repository import Gtk
    import json

    class EqualizerApp(Gtk.Window):
        def __init__(self, node_id):
            Gtk.Window.__init__(self, title="3 Band Equalizer")
            self.set_default_size(400, 200)

            self.box = Gtk.Box(orientation=Gtk.Orientation.VERTICAL, spacing=6)
            self.add(self.box)

            self.sliders = []

            try:
                result = subprocess.run(['pw-dump', str(node_id)], capture_output=True, text=True, check=True)
                output = result.stdout
                data = json.loads(output)
                props = data[0]["info"]["params"]["Props"][1]["params"]

                for i in range(3):
                    gain = props[4 + 8 * i] # extracting gain values from the JSON
                    slider = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, -20.0, 20.0, 0.1) # range set from -20 to 20
                    slider.set_value(gain)
                    self.sliders.append(slider)
                    self.box.pack_start(slider, True, True, 0)

            except subprocess.CalledProcessError as e:
                print(f"Error: {e}")

    win = EqualizerApp(1) # 1 is the node ID; you can replace it with the desired ID
    win.connect("destroy", Gtk.main_quit)
    win.show_all()
    Gtk.main()

It seemed to actually understand how to parse the output and get the values. Unfortunately it disregarded the instruction about the command line argument, but I was happy to ignore that for the moment. Now I needed to make it also send the values back to PipeWire. So I asked:

That’s great! Now, when the sliders are moved, I want the program to execute the command pw-cli s <node id> Props '{ "params": [ "eq_band_<band number>:Gain", <value> ] }' to set the value for the respective band

Note how I templated the command in a way that is understandable by a human, but not necessarily parsable by a machine. I was curious to see how much of it the AI chatbot would understand. And it did understand it! It responded with:

    import gi
    import subprocess
    gi.require_version('Gtk', '3.0')
    from gi.repository import Gtk
    import json

    class EqualizerApp(Gtk.Window):
        def __init__(self, node_id):
            Gtk.Window.__init__(self, title="3 Band Equalizer")
            self.set_default_size(400, 200)

            self.box = Gtk.Box(orientation=Gtk.Orientation.VERTICAL, spacing=6)
            self.add(self.box)

            self.sliders = []

            try:
                result = subprocess.run(['pw-dump', str(node_id)], capture_output=True, text=True, check=True)
                output = result.stdout
                data = json.loads(output)
                props = data[0]["info"]["params"]["Props"][1]["params"]

                for i in range(3):
                    gain = props[4 + 8 * i] # extracting gain values from the JSON
                    slider = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, -20.0, 20.0, 0.1) # range set from -20 to 20
                    slider.set_value(gain)
                    slider.connect("value-changed", self.on_slider_moved, node_id, i + 1)
                    self.sliders.append(slider)
                    self.box.pack_start(slider, True, True, 0)

            except subprocess.CalledProcessError as e:
                print(f"Error: {e}")

        def on_slider_moved(self, widget, node_id, band_number):
            value = widget.get_value()
            command = f'pw-cli s {node_id} Props \'{{ "params": [ "eq_band_{band_number}:Gain", {value} ] }}\''
            try:
                subprocess.run(command, shell=True, check=True)
            except subprocess.CalledProcessError as e:
                print(f"Error: {e}")

    win = EqualizerApp(1) # 1 is the node ID; you can replace it with the desired ID
    win.connect("destroy", Gtk.main_quit)
    win.show_all()
    Gtk.main()

Impressive! Next step was for me to run this and see what happens. Unfortunately, it initially crashed. I didn’t even look at the error, I just copy-pasted it from the terminal into the chat:

    Traceback (most recent call last):
      File "/home/gkiagia/Code/gptequalizer/equalizer.py", line 43, in <module>
        win = EqualizerApp(65) # 1 is the node ID; you can replace it with the desired ID
              ^^^^^^^^^^^^^^^^
      File "/home/gkiagia/Code/gptequalizer/equalizer.py", line 27, in __init__
        slider.set_value(gain)
    TypeError: Must be number, not str

ChatGPT responded with an explanation of the error and instructions on how to fix it, along with the fixed code:

    import gi
    import subprocess
    gi.require_version('Gtk', '3.0')
    from gi.repository import Gtk
    import json

    class EqualizerApp(Gtk.Window):
        def __init__(self, node_id):
            Gtk.Window.__init__(self, title="3 Band Equalizer")
            self.set_default_size(400, 200)

            self.box = Gtk.Box(orientation=Gtk.Orientation.VERTICAL, spacing=6)
            self.add(self.box)

            self.sliders = []

            try:
                result = subprocess.run(['pw-dump', str(node_id)], capture_output=True, text=True, check=True)
                output = result.stdout
                data = json.loads(output)
                props = data[0]["info"]["params"]["Props"][1]["params"]

                for i in range(3):
                    gain = float(props[4 + 8 * i]) # Convert to float
                    slider = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, -20.0, 20.0, 0.1)
                    slider.set_value(gain)
                    slider.connect("value-changed", self.on_slider_moved, node_id, i + 1)
                    self.sliders.append(slider)
                    self.box.pack_start(slider, True, True, 0)

            except subprocess.CalledProcessError as e:
                print(f"Error: {e}")

        def on_slider_moved(self, widget, node_id, band_number):
            value = widget.get_value()
            command = f'pw-cli s {node_id} Props \'{{ "params": [ "eq_band_{band_number}:Gain", {value} ] }}\''
            try:
                subprocess.run(command, shell=True, check=True)
            except subprocess.CalledProcessError as e:
                print(f"Error: {e}")

    win = EqualizerApp(65) # 65 is the node ID; you can replace it with the desired ID
    win.connect("destroy", Gtk.main_quit)
    win.show_all()
    Gtk.main()

Now it was time to go back and insist on a command line argument, because hardcoding random IDs didn’t seem like a good idea. So I asked:

I would like the node ID to be passed in the program via a command line argument

And it responded:

    import gi
    import subprocess
    import argparse
    gi.require_version('Gtk', '3.0')
    from gi.repository import Gtk
    import json

    class EqualizerApp(Gtk.Window):
        def __init__(self, node_id):
            Gtk.Window.__init__(self, title="3 Band Equalizer")
            self.set_default_size(400, 200)

            self.box = Gtk.Box(orientation=Gtk.Orientation.VERTICAL, spacing=6)
            self.add(self.box)

            self.sliders = []

            try:
                result = subprocess.run(['pw-dump', str(node_id)], capture_output=True, text=True, check=True)
                output = result.stdout
                data = json.loads(output)
                props = data[0]["info"]["params"]["Props"][1]["params"]

                for i in range(3):
                    gain = float(props[4 + 8 * i]) # Convert to float
                    slider = Gtk.Scale.new_with_range(Gtk.Orientation.HORIZONTAL, -20.0, 20.0, 0.1)
                    slider.set_value(gain)
                    slider.connect("value-changed", self.on_slider_moved, node_id, i + 1)
                    self.sliders.append(slider)
                    self.box.pack_start(slider, True, True, 0)

            except subprocess.CalledProcessError as e:
                print(f"Error: {e}")

        def on_slider_moved(self, widget, node_id, band_number):
            value = widget.get_value()
            command = f'pw-cli s {node_id} Props \'{{ "params": [ "eq_band_{band_number}:Gain", {value} ] }}\''
            try:
                subprocess.run(command, shell=True, check=True)
            except subprocess.CalledProcessError as e:
                print(f"Error: {e}")

    def parse_arguments():
        parser = argparse.ArgumentParser(description='3 Band Equalizer')
        parser.add_argument('node_id', type=int, help='Node ID')
        return parser.parse_args()

    args = parse_arguments()
    win = EqualizerApp(args.node_id)
    win.connect("destroy", Gtk.main_quit)
    win.show_all()
    Gtk.main()

And it worked! I could now run the program with a command line argument. But it still did not work as expected. There seemed to be a problem with retrieving the initial values of the sliders.

Chapter 3: Manual refinement

At this point, I thought it didn’t make any sense to try and ask for a refinement, since I understood the problem myself and it seemed easy to fix manually.

The problem was that due to the strange nature of the “params” array in the JSON, the bot had not completely understood the pattern. Normally, this wouldn’t have been an array in the first place, since it’s a dictionary of keys and values. It’s supposed to be a JSON object, but it’s not. Look again:

    "params": [
      "eq_band_1:Freq",
      100.000000,
      "eq_band_1:Q",
      1.000000,
      "eq_band_1:Gain",
      0.000000,
      "eq_band_1:b0",
      1.000000,
      "eq_band_1:b1",
      0.000000,
      "eq_band_1:b2",
      0.000000,
      "eq_band_1:a0",
      1.000000,
      "eq_band_1:a1",
      0.000000,
      "eq_band_1:a2",
      0.000000,
      "eq_band_2:Freq",
      440.000000,
      "eq_band_2:Q",
      1.000000,
      "eq_band_2:Gain",
      0.000000,

ChatGPT tried to get it by using some hardcoded values to index this array:

gain = float(props[4 + 8 * i])

The values are not totally random. There are 8 keys between the first and the second Gain key, and the first Gain key sits at index 4 of the array… But that’s not what we want. It does not take into account the fact that keys and values are separate elements of the array. The first Gain value is actually at index 5, and each band occupies 18 elements (9 keys plus 9 values), so the jump between the Gain values is 18 and the code should have been:

gain = float(props[5 + 18 * i])

After fixing that, it worked! I could now move the sliders and hear the difference in the sound.
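An alternative that avoids index arithmetic altogether is to pair up the alternating keys and values into a dictionary and look the gains up by name. Below is a small sketch of that idea, using a made-up sample list in the same shape as the pw-dump output; the helper name params_to_dict and the sample gain values are hypothetical.

```python
def params_to_dict(flat_params):
    """Pair up alternating keys and values from a flat "params" list."""
    keys = flat_params[0::2]    # even positions hold the control names
    values = flat_params[1::2]  # odd positions hold the control values
    return dict(zip(keys, values))


# Build a sample list: 3 bands with 9 controls each, keys and values interleaved
controls = ["Freq", "Q", "Gain", "b0", "b1", "b2", "a0", "a1", "a2"]
sample = []
for band in (1, 2, 3):
    for name in controls:
        value = float(band) if name == "Gain" else 0.0  # recognizable gains
        sample += [f"eq_band_{band}:{name}", value]

props = params_to_dict(sample)
gains = [props[f"eq_band_{band}:Gain"] for band in (1, 2, 3)]
print(gains)

# The Gain keys sit at indices 4, 22 and 40, hence the values at 5 + 18 * i
print([sample.index(f"eq_band_{band}:Gain") for band in (1, 2, 3)])
```

Looking controls up by name would also keep working if the order or the number of controls per band ever changed.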

There was still one small problem, though, that has to do with how pw-dump interprets its command line arguments. It expects an argument which can be either an object ID, an object serial or a name (node name, metadata name, etc). Now, when we pass an ID, the same number may also be interpreted as a serial and the output will contain all the matching objects. The problem is that the parser in the Python code expects to find the gain values in the first object of the output, but this may not be the case. So, I added a simple loop to try and find the first object that has these params. Instead of:

props = data[0]["info"]["params"]["Props"][1]["params"]

I wrote this:

    for i in range(10):
        try:
            props = data[i]["info"]["params"]["Props"][1]["params"]
            break
        except KeyError:
            continue

I could have probably asked ChatGPT to fix this for me, and it would probably have come up with a more clever solution, but it was very low hanging fruit for me to fix it myself and I didn’t want to bother writing a prompt for it. It felt like it would have taken more time to write the prompt than to fix it.
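For illustration, a somewhat more defensive variant of that loop could look like the sketch below. It walks over every object in the output and picks the first control list whose keys start with eq_band_, rather than assuming the controls live in Props[1] of one of the first ten objects. The function name and the sample data are invented for the example.

```python
def find_filter_params(objects, prefix="eq_band_"):
    """Return the first flat control list whose keys start with prefix, or None."""
    for obj in objects:
        props_entries = obj.get("info", {}).get("params", {}).get("Props", [])
        for entry in props_entries:
            params = entry.get("params", [])
            if params and isinstance(params[0], str) and params[0].startswith(prefix):
                return params
    return None


# Invented sample: the first object has no filter controls, the second one does
sample_dump = [
    {"id": 65, "info": {"params": {}}},
    {"id": 218, "info": {"params": {"Props": [
        {"volume": 1.0, "params": []},
        {"params": ["eq_band_1:Freq", 100.0, "eq_band_1:Q", 1.0, "eq_band_1:Gain", 0.0]},
    ]}}},
]

params = find_filter_params(sample_dump)
print(params[4], params[5])
```

Returning None when nothing matches leaves it to the caller to decide whether to show an error or fall back to default slider positions.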

Chapter 4: The final result

You can find the final code here

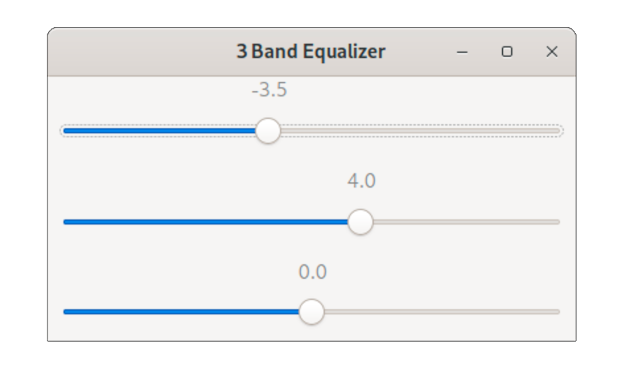

And this is how it looks like:

I hope you enjoyed this story! I certainly did. I think it’s amazing how easy it is to write proof-of-concept code using generative AI. I know this would have taken me at least an entire afternoon to write on my own, because I would have had to look up the documentation of GTK, figure out how to parse JSON, how to run a command and parse its output, etc. Not to mention that I most likely wouldn’t have done this in Python, since I am not really familiar with it. But with ChatGPT, it took me less than an hour to get to a working prototype. And I didn’t even have to look up anything or refresh my Python skills! I just had to write a few prompts and copy-paste the code that was generated for me. I think this is a very powerful tool and I’m looking forward to using it more in the future.